Training an AI/ML-Based Detection Algorithm for 3D Imaging Systems

The tech industry is inundated with buzzwords any time the words "algorithm", "automated", or "advanced technology" are used. We are used to hearing about Machine Learning, Deep Learning, and Artificial Intelligence in all types of content, ranging from dire warnings of machine takeover to efficient, life-improving technologies. So, at a high level, what does it actually take to develop an algorithm to detect potential threat objects surrounded by clutter, possibly shielded, or otherwise entangled with benign/normal objects?

One straightforward example is the desire for, and investment in, deploying detection algorithms in the travel and cargo industries. The development of AI/ML-based detection algorithms to identify explosives, weapons, illegal drugs, lithium batteries, or smuggled wildlife items (like ivory, bones, fossils, or even live specimens) requires a systematic and meticulous approach. Creating an AI/ML-based detection algorithm for 3D imaging systems involves a series of critical steps, and assumptions, missteps, or ineffective approaches at any single step can greatly reduce the effectiveness of the deployed solution further down the development line.

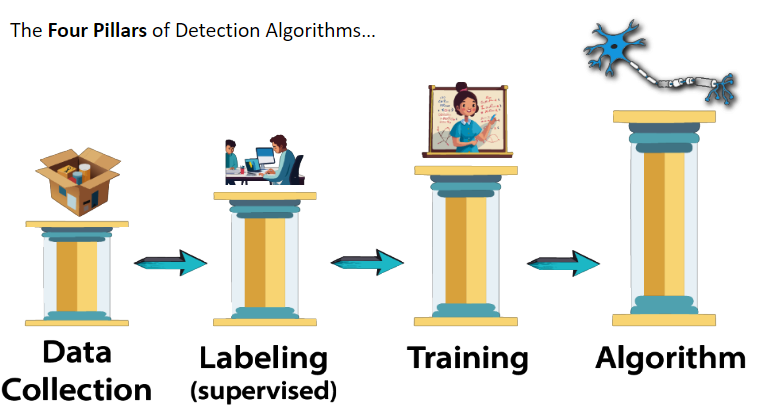

Let's explore each step and explain, at a high level, what it means for an organization and how to best support these activities. Here, we'll outline the key steps involved in the process (data collection, image object segmentation, model training and selection, algorithm development, and testing and validation) and highlight how an organization might set itself up for success in the effort.

1. Data Collection

Data collection is the foundational step in training any AI/ML model. This step can often be the most dangerous and manually intensive, as it requires the collection of representative objects suitable for training the algorithm to find what you intend to find once deployed. This means that if you're looking for sharp knives, guns and ammunition, or even bombs and explosives, you need to collect these objects in a safe manner and on the same imaging equipment you plan to use in the field. For a 3D imaging system:

- Source of Data: Collect 3D scans from various security checkpoints, baggage screenings, or controlled environments. Ensure diversity in object types, shapes, orientations, and environments. In aviation security, this means collecting a wide array of images of luggage of different shapes and sizes, with as wide a variety of contents as possible to mimic real-world travelers. Until synthetic data has demonstrated a consistent 1:1 accuracy (which will be a major breakthrough for the whole industry), the collection of physical data requires an intensive investment of time and labor, along with meticulous planning and organization.

- Labeling Data: Manually label the objects within the 3D scans. This involves marking explosives, weapons, drugs, batteries, and wildlife items within each scan and ensuring these labels are consistent and accurate. This is a time-consuming process, but it is critical for supervised learning. Categorizing the appropriate objects as threats, and ensuring that another person can open the scan and follow the labeling protocol to find the correct object, is very important so that your algorithm doesn't train on the wrong object and start classifying lipstick as C4, for example.
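One way to make a labeling protocol checkable by a second reviewer is to store each label as a structured record and validate it automatically. The sketch below is illustrative only: the `ThreatLabel` fields, the class list, and the voxel bounding-box convention are all assumptions made for this example, not a format described in this article or defined by any standard.

```python
from dataclasses import dataclass

# Hypothetical class list; a real project would define its own taxonomy.
ALLOWED_CLASSES = {"explosive", "weapon", "drug", "lithium_battery", "wildlife"}


@dataclass(frozen=True)
class ThreatLabel:
    scan_id: str
    threat_class: str
    # Axis-aligned box in voxel indices: (z0, y0, x0, z1, y1, x1), with the
    # upper corner exclusive. This convention is an assumption of the sketch.
    bbox: tuple
    annotator: str


def validate_label(label, scan_shape):
    """Return a list of human-readable problems; an empty list means it passed."""
    problems = []
    if label.threat_class not in ALLOWED_CLASSES:
        problems.append(f"unknown class: {label.threat_class}")
    if len(label.bbox) != 6:
        problems.append("bbox must have 6 values")
        return problems
    lows, highs = label.bbox[:3], label.bbox[3:]
    for axis, (lo, hi, size) in enumerate(zip(lows, highs, scan_shape)):
        if not (0 <= lo < hi <= size):
            problems.append(f"bbox out of range on axis {axis}")
    return problems


good = ThreatLabel("scan-001", "weapon", (2, 10, 10, 8, 40, 30), "reviewer_a")
bad = ThreatLabel("scan-001", "lipstick", (2, 10, 10, 8, 40, 300), "reviewer_b")
print(validate_label(good, scan_shape=(16, 64, 64)))
print(validate_label(bad, scan_shape=(16, 64, 64)))
```

A check like this will not catch a label drawn around the wrong object, but it can catch class names outside the agreed taxonomy and boxes that fall outside the scan before they reach training.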

Important to Remember: Ensure the dataset is balanced. This is easy to say and may feel like a no-brainer, but it's essential that data collection efforts do everything they can to check for bias, challenge assumptions, and establish objective criteria for the data collection process. A dataset that is skewed towards a particular threat, always uses the same type of "clutter", or contains a vastly higher number of non-threat items can lead to a model that performs poorly in real-world scenarios where the detection of rare items is crucial. Getting this process right the first time saves the time and substantial resources otherwise spent returning to recollect data to address gaps or issues in the dataset.
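A simple count of labels per class is often enough to spot an imbalance early. The following is a minimal sketch, assuming labels are available as a flat list of class names; the function name `class_balance_report` and the `max_ratio` cutoff of 10 are hypothetical choices for illustration, not recommended values.

```python
from collections import Counter


def class_balance_report(labels, max_ratio=10.0):
    """Count labels per class and flag classes that are rare next to the largest.

    max_ratio is an illustrative cutoff, not a recommended value.
    """
    counts = Counter(labels)
    if not counts:
        return counts, []
    largest = max(counts.values())
    flagged = sorted(
        name for name, n in counts.items() if largest / n > max_ratio
    )
    return counts, flagged


# Toy label list standing in for a real labeled dataset.
labels = ["benign"] * 900 + ["weapon"] * 80 + ["explosive"] * 15 + ["wildlife"] * 5
counts, flagged = class_balance_report(labels)
print(counts)
print("under-represented:", flagged)
```

Class counts only cover one kind of skew. Checking for repeated clutter or a single bag type would need the same idea applied to other metadata fields.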

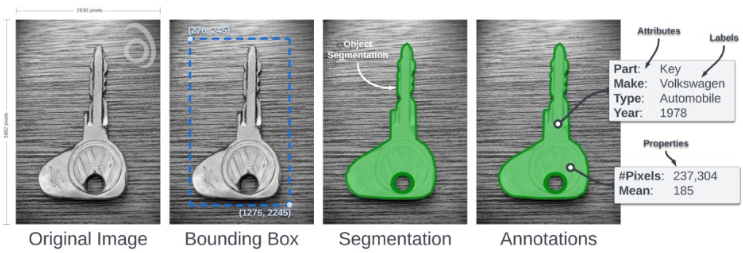

2. Image Object Segmentation (Data Preparation)

Object segmentation is the process of partitioning a digital image into multiple segments (sets of pixels) and essentially highlighting, outlining, or creating a mask of the object of interest. If you imagine a CT scan of a human body to be used in a detection algorithm meant to find tumors, you would need to segment the tumor as completely and accurately as possible. This is what the ML/AI algorithm will learn to detect through the various training stages by understanding what you are telling it to look for.

- Preprocessing: Review and clean the data to ensure it meets file format requirements and is as close to the "real world" deployed versions of the data as possible. If all you collect and train on is pristine, laboratory-quality images, the algorithm will have been spoiled in its development. When it is then expected to work just as well on machines "out in the wild", with years of operation and less-than-perfect operating environments, it will not translate well to images with the blurring, fuzziness, streaks, or other artifacts that may be present on seasoned equipment.

- Segmentation Techniques: Employ algorithms like thresholding, clustering, or neural networks designed for 3D image segmentation to identify and separate the objects of interest. This process is crucial because it directly affects both the positive detection rate and the false positive rate of your algorithm. Mistakenly segmenting non-threat or benign objects will skew your algorithm to think of those objects as worth detecting. Alternatively, failing to completely segment threat objects can limit your algorithm's ability to correctly classify them if their configuration varies out in the real world.
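To make the simplest of these techniques concrete, here is a minimal sketch of thresholding followed by 3D connected-component labeling. It is written in plain Python on a toy nested-list volume so it stays dependency-free; on real scans one would more likely reach for an array library (for example `scipy.ndimage.label`). The threshold value and the choice of 6-connectivity are assumptions for illustration.

```python
from collections import deque


def segment_by_threshold(volume, threshold):
    """Label 6-connected regions of voxels whose value is >= threshold.

    volume is a nested list indexed as volume[z][y][x]. Returns a same-shaped
    nested list of integer labels (0 = background) and the number of regions.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    labels = [[[0] * width for _ in range(height)] for _ in range(depth)]
    neighbors = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    region_count = 0
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                if volume[z][y][x] < threshold or labels[z][y][x]:
                    continue
                # Unlabeled voxel above threshold: flood-fill its whole region.
                region_count += 1
                labels[z][y][x] = region_count
                queue = deque([(z, y, x)])
                while queue:
                    cz, cy, cx = queue.popleft()
                    for dz, dy, dx in neighbors:
                        nz, ny, nx = cz + dz, cy + dy, cx + dx
                        if (
                            0 <= nz < depth
                            and 0 <= ny < height
                            and 0 <= nx < width
                            and not labels[nz][ny][nx]
                            and volume[nz][ny][nx] >= threshold
                        ):
                            labels[nz][ny][nx] = region_count
                            queue.append((nz, ny, nx))
    return labels, region_count


# Toy 2x3x4 volume with two dense blobs separated by low-density voxels.
volume = [
    [[9, 9, 0, 0], [9, 0, 0, 0], [0, 0, 0, 8]],
    [[9, 0, 0, 0], [0, 0, 0, 0], [0, 0, 8, 8]],
]
labels, region_count = segment_by_threshold(volume, threshold=5)
print(region_count)
```

A pure threshold like this will merge a threat with any touching object of similar density, which is one reason the choice of segmentation technique matters so much.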

Important to Remember: Use advanced segmentation techniques and tools that include automated and semi-automated quality assurance elements tailored for 3D images to enhance accuracy. Traditional 2D techniques can often fall short in capturing the complexity of 3D data, so working with experienced users and tools with a proven track record will be important. Object Segmentation, like Data Collection, should be viewed as a critical foundation step to preparing your algorithm for the best performance potential.
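One common automated quality check is to measure how well two segmentations of the same object overlap, for instance from two annotators or from a tool and a reviewer. The sketch below uses the Dice coefficient for this. Representing masks as sets of voxel coordinates and the 0.8 review cutoff are assumptions for illustration only.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two sets of voxel coordinates (1.0 = identical)."""
    if not mask_a and not mask_b:
        return 1.0  # Two empty masks agree trivially.
    overlap = len(mask_a & mask_b)
    return 2.0 * overlap / (len(mask_a) + len(mask_b))


# Masks as sets of (z, y, x) voxel coordinates from two hypothetical annotators.
annotator_1 = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 0, 0)}
annotator_2 = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 1, 1)}

score = dice_coefficient(annotator_1, annotator_2)
REVIEW_BELOW = 0.8  # Illustrative cutoff only.
print(score, "needs review" if score < REVIEW_BELOW else "ok")
```

Scans that fall below the chosen cutoff can be routed back for manual review rather than silently entering the training set.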

3. Model Training and Selection

Training involves feeding the prepared data into a machine learning model and tuning it to improve performance:

- Selecting Algorithms: Start with a range of algorithms including Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers. For 3D data, 3D CNNs are particularly effective.

- Training: Use a large, labeled dataset to train the model. Split the dataset into training and validation sets to monitor performance and detect overfitting. This step can be difficult depending on the success and volume of the data collection effort, but it is essential for the organization's internal performance scoring and monitoring throughout the development process. Some projects or use cases will be interested not only in the class-by-class detection rate and overall false positive rate, but also in the ability of the algorithm to generalize to wider varieties of threats that may not be exactly represented in the dataset.

- Hyperparameter Tuning: Adjust parameters like learning rate, batch size, and the number of layers as needed to optimize the model's end-stage performance.
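The training and validation split mentioned above can be sketched as follows. This is a minimal example, assuming each sample is a `(scan_id, label)` pair; the function name `stratified_split`, the 20% validation fraction, and the fixed seed are hypothetical choices. The split is stratified so that rare classes still appear in the validation set.

```python
import random
from collections import defaultdict


def stratified_split(samples, validation_fraction=0.2, seed=0):
    """Split (scan_id, label) pairs class by class.

    Every class with at least two samples contributes at least one sample
    to validation; the fraction and seed are illustrative defaults.
    """
    by_class = defaultdict(list)
    for scan_id, label in samples:
        by_class[label].append(scan_id)

    rng = random.Random(seed)
    train, validation = [], []
    for label in sorted(by_class):
        ids = by_class[label][:]
        rng.shuffle(ids)
        n_val = max(1, round(len(ids) * validation_fraction)) if len(ids) > 1 else 0
        validation += [(i, label) for i in ids[:n_val]]
        train += [(i, label) for i in ids[n_val:]]
    return train, validation


samples = [(f"benign-{i}", "benign") for i in range(50)]
samples += [(f"weapon-{i}", "weapon") for i in range(10)]
samples += [(f"battery-{i}", "battery") for i in range(3)]
train, validation = stratified_split(samples)
print(len(train), len(validation))
```

In practice, scans of the same physical bag or object would normally be kept on the same side of the split so the validation score is not inflated by near-duplicates.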

Important to Remember: Leverage transfer learning by using pre-trained models on similar tasks. This can significantly reduce the amount of data and computation required to achieve high performance. Additionally, over time an organization can improve its efficiency by using lower-performing algorithms on new datasets to establish initial segmentations, and then cleaning up those segmentations to a higher quality afterwards.

4. Algorithm Development

Once you've trained the model(s), develop the detection algorithm:

- Integration: Integrate the trained model(s) into a real-time detection system. Ensure it can process 3D images and provide immediate feedback while meeting whatever integration and regulatory requirements apply. This may be as simple as integrating with an OEM system to augment their product, but it will still require conformance to their interfacing needs. Alternatively, in an Open Architecture environment, this could require compliance with a file format standard (see DICOM or DICOS) or an integration platform like the Open Platform Software Library (OPSL) with the TSA, or an API in other industries.

- Optimization: Optimize the algorithm for speed and efficiency. Use techniques like model pruning and quantization to reduce computational load with as little loss of accuracy as possible. Be aware of the operational needs of the customer and end user. Some areas may be fine for the algorithm to take 10 or 15 minutes to process, while other industries require detection outputs in a couple of seconds. Optimization needs to take into consideration speed AND hardware resource allocation. Having options to fine-tune the balance between speed and available hardware resources gives your algorithm more options for where it can be used.

- Threshold Setting: Define the detection thresholds that balance sensitivity and specificity. Lower thresholds can increase sensitivity but may also increase false positives.
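The trade-off in threshold setting is easiest to see by sweeping a few candidate thresholds over scored scans. The sketch below is a minimal illustration with made-up scores; the function name `sweep_thresholds` and the rule that a scan alarms when its score is at or above the threshold are assumptions of this example.

```python
def sweep_thresholds(scores, is_threat, thresholds):
    """For each threshold, report the detection rate and false alarm rate.

    scores are model confidences in [0, 1]; is_threat holds the true labels.
    A scan alarms when its score is >= threshold. Assumes the data contains
    at least one threat and at least one benign scan.
    """
    n_threat = sum(is_threat)
    n_benign = len(is_threat) - n_threat
    rows = []
    for t in thresholds:
        hits = sum(1 for s, y in zip(scores, is_threat) if y and s >= t)
        false_alarms = sum(1 for s, y in zip(scores, is_threat) if not y and s >= t)
        rows.append((t, hits / n_threat, false_alarms / n_benign))
    return rows


# Made-up scores for 4 threat scans followed by 6 benign scans.
scores = [0.95, 0.80, 0.60, 0.30, 0.70, 0.40, 0.35, 0.20, 0.10, 0.05]
is_threat = [True, True, True, True, False, False, False, False, False, False]

rows = sweep_thresholds(scores, is_threat, [0.25, 0.5, 0.75])
for t, detection_rate, false_alarm_rate in rows:
    print(f"threshold={t:.2f} detection={detection_rate:.2f} false_alarm={false_alarm_rate:.2f}")
```

In this toy data, lowering the threshold from 0.75 to 0.25 raises the detection rate from 50% to 100%, but the false alarm rate climbs from 0% to 50% along with it.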

Important to Remember: Implement a robust feature extraction mechanism that can handle variations in object shapes, sizes, and orientations. This ensures the model's effectiveness across diverse real-world scenarios. Data collection cannot always account for every conceivable configuration, arrangement, or scenario out in the world. Ensure your algorithm is prepared to generalize without severely impacting its positive detection and false detection rates.

5. Testing and Validation

Extensive testing and validation are crucial to ensure the algorithm's reliability and robustness. Part of this step requires adequate data collection not just to train, but also to build testing and validation sets that cover what is represented in the training data while differing enough to help gauge an algorithm's ability to generalize. Training an algorithm only to a test or certification standard may help pass it into deployment in the short term, but in the long term it enables bad actors to find and exploit weaknesses.

- Performance Metrics: Use metrics like accuracy, precision, recall, and F1 score to evaluate performance. Focus on minimizing false positives and false negatives.

- Real-world Testing: Test the algorithm in real-world environments and scenarios as best as you can. This helps identify potential shortcomings that might not be evident in controlled settings. When using non-threat luggage or healthy patient scans, you want to try to utilize data from the field with a wide array of variables. For luggage, that means using scans from hot and cold weather climates; from men, women, and children; and from long-distance and short-distance flights alike. For the medical field, you want as wide an array of body types and demographics as you can get.

- Continuous Monitoring and Updates: Continuously monitor the algorithm’s performance post-deployment and update it as necessary. Incorporate feedback from end-users to fine-tune the model and work with the customer or regulator to gather objective field performance data whenever possible.
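The metrics named above follow directly from counts of true and false alarms. Here is a minimal sketch using made-up results; the function name `detection_metrics` and the toy numbers are assumptions for illustration. It also shows why accuracy alone can mislead when threats are rare.

```python
def detection_metrics(predicted, actual):
    """Return accuracy, precision, recall, and F1 for binary alarm decisions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if not p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up results: 5 threats among 100 scans.
actual = [True] * 5 + [False] * 95

# A detector that never alarms still scores 95% accuracy while missing every threat.
never_alarms = detection_metrics([False] * 100, actual)

# A detector that finds 4 of 5 threats at the cost of 6 false alarms.
predicted = [True] * 4 + [False] * 1 + [True] * 6 + [False] * 89
realistic = detection_metrics(predicted, actual)

print(never_alarms)
print(realistic)
```

The second detector has lower accuracy than the one that never alarms, yet it is clearly the more useful of the two, which is why recall and precision deserve attention alongside accuracy.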

Important to Remember: Simulate adversarial scenarios where attempts are made to deceive the detection system. Challenge any potential bias and assumptions in the data collection process. Anything taken for granted, like the proper use of trays in the airport CT security checkpoint line, or patients being aligned properly, should be challenged and tested against. This helps in understanding the algorithm's resilience, improves its robustness against sophisticated smuggling tactics, and can potentially catch flaws in the screening process before the algorithm ever deploys.

Ensuring High Detection Rates with Low False Alarms

- Diverse and Comprehensive Data Collection: A well-rounded dataset that covers a wide range of scenarios is critical. It helps the model generalize better and detect rare items accurately. Investing in extensive planning and organization here is worthwhile.

- Advanced 3D Image Segmentation: Utilizing cutting-edge segmentation techniques ensures precise object identification, which is foundational for accurate detection. Sound datasets amplify the performance of good algorithms.

- Transfer Learning: Employing pre-trained models can jumpstart the training process and significantly enhance performance with less data and computational resources. Don't reinvent the wheel, but check performance and adjust incrementally.

- Feature Extraction and Variation Handling: Robust feature extraction mechanisms that can account for variations in objects ensure the model's reliability in diverse scenarios. Fine tuning variables and measuring impact can give improvements in specific gaps in the detection of certain classes when applied carefully.

- Adversarial Testing: Simulating real-world smuggling attempts provides insights into the algorithm's weaknesses and helps in fortifying it against sophisticated evasion techniques.

Overall, the development of an algorithm to detect objects of interest on 3D data is a complex topic. Depending on the philosophy, tools, and approach employed by an organization, some of the above topics can carry more weight than others. Stratovan believes that each of these carries significant weight in the performance of an algorithm, especially the foundational steps of data collection and data segmentation. We hope this article shared some insights on the various steps that go into making an AI/ML-based detection algorithm.

If you are interested in a demonstration or in learning more about Stratovan's Segmentation software suite, please visit this link: https://www.stratovan.com/request-a-demo

If you're interested in how Stratovan can build you a detection algorithm, or partner with your team to improve a detection algorithm, reach out to us here: https://www.stratovan.com/plan-project